WORLD

Taylor Swift: A Journey of Reinvention, Ownership, and Cultural Influence

Few artists have reshaped modern music and pop culture the way Taylor Swift has. From her teenage country beginnings in Nashville to her current position as one of the most influential entertainers on the planet, her career has been a masterclass in artistry, business acumen, and storytelling. Her rise to fame is not only about chart-topping hits but also about resilience, reinvention, and taking control of her creative and financial future.

Early Beginnings: The Nashville Dream

Born in Pennsylvania, Swift moved to Nashville at a young age, determined to break into the country music industry. Signing with Big Machine Records, she released her self-titled debut album that blended heartfelt songwriting with traditional country melodies. Tracks like “Tim McGraw” and “Teardrops on My Guitar” instantly made her a household name, proving that she was more than a passing teen sensation.

Her follow-up album Fearless solidified her reputation. Songs like “Love Story” and “You Belong With Me” bridged country and pop, earning her critical acclaim, multiple awards, and her first Grammy for Album of the Year. These early records showcased her gift for autobiographical storytelling, a skill that would continue to define her career.

Crossing Over: Pop Stardom and Global Recognition

With Red, Swift pushed further toward pop, and by the release of 1989 she had fully transitioned into mainstream pop. The 1989 era, filled with hits like “Shake It Off” and “Blank Space,” propelled her to global superstardom. Collaborating with producers like Max Martin and Shellback, she mastered the formula for pop anthems while retaining her lyrical depth.

1989 earned her another Album of the Year Grammy, cementing her as a genre-defying artist who could dominate both country and pop markets. During this period, she also leaned into elaborate music videos, bold fashion reinventions, and highly publicized collaborations that expanded her influence beyond music.

Reputation, Reinvention, and Image Control

When Reputation arrived, Swift took a darker turn. The album explored themes of media scrutiny, betrayal, and reinvention. Songs like “Look What You Made Me Do” introduced an edgier persona, signaling her ability to adapt and respond to cultural narratives surrounding her. Despite initial criticism, Reputation sold millions worldwide and demonstrated her resilience in an industry that often thrives on tearing artists down.

Folklore, Evermore, and the Return of Storytelling

The 2020 pandemic brought another reinvention. Working closely with Jack Antonoff and Aaron Dessner, she released Folklore and Evermore, two sister albums that showcased indie-folk and alternative sounds. These records shifted focus from autobiographical tales to fictional storytelling, exploring characters, narratives, and emotional landscapes.

Songs like “Cardigan” and “August” received critical praise for their lyrical complexity. Folklore went on to win the Grammy for Album of the Year, proving that even in unexpected genres, she could dominate both charts and awards.

Masters, Ownership, and Taylor’s Version

One of the defining chapters in her career has been her battle for ownership of her music. After Scooter Braun’s acquisition of Big Machine Records, Swift’s masters for her first six albums changed hands without her consent. In response, she began re-recording her catalog as Taylor’s Version.

This unprecedented move not only gave her financial control but also inspired a larger conversation about artists’ rights in the music industry. Albums like Fearless (Taylor’s Version) and Red (Taylor’s Version) have been embraced by fans, often outperforming the originals. By reclaiming her work, she redefined what it means to be an empowered artist in the modern era.

The Eras Tour and Cultural Impact

Swift’s Eras Tour became a global phenomenon. More than just a concert, it served as a retrospective journey through every stage of her career, from her country roots to her latest albums like Midnights and The Tortured Poets Department. The tour shattered records for ticket sales, streaming boosts, and cultural engagement.

Swifties, her dedicated fanbase, became an integral part of this experience. From trading friendship bracelets to creating viral social media moments, the fan culture surrounding Swift elevated her tour into a global cultural event.

Recent Releases: Midnights and The Life of a Showgirl

Her 2022 album Midnights blended synth-pop with introspective storytelling, delivering hits like “Anti-Hero” that resonated with both critics and audiences. The album quickly became one of her most commercially successful projects.

Looking ahead, The Life of a Showgirl has generated immense anticipation. With its glamorous aesthetic and theatrical undertones, the record signals yet another artistic reinvention. Fans expect it to blend bold visuals with intricate songwriting, showcasing Swift’s never-ending ability to surprise.

Awards, Records, and Influence

Over her career, Swift has broken countless records. She is one of the best-selling artists of all time, has earned multiple Grammy Awards, and continues to dominate streaming platforms. Her ability to consistently deliver chart-topping albums across genres is unmatched.

Beyond the numbers, her influence extends to cultural conversations about gender, ownership, and the role of music in shaping identity. By combining artistry with activism, she has become more than an entertainer—she is a cultural force.

FAQs

How many albums has Taylor Swift released?

Through The Tortured Poets Department, she has released 11 original studio albums, plus four re-recorded Taylor’s Version albums (Fearless, Red, Speak Now, and 1989). The Life of a Showgirl is her twelfth studio album.

Why did she re-record her albums?

Swift re-recorded to reclaim ownership of her masters after they were sold to Scooter Braun’s company without her approval.

What is The Eras Tour?

The Eras Tour is a global concert series that takes fans through every stage of her career, celebrating her diverse discography.

What is her latest album?

Her most recent album is The Life of a Showgirl, which blends theatrical themes with her signature lyrical storytelling.

How many Grammys has she won?

Swift has won more than a dozen Grammy Awards, including four Album of the Year honors for Fearless, 1989, Folklore, and Midnights.

What is the Swifties fan culture?

Swifties are her devoted fans, known for their creativity, loyalty, and global community that drives much of her cultural impact.

Conclusion

Taylor Swift’s journey is more than a story of hit records; it is one of resilience, reinvention, and empowerment. From her Nashville beginnings to her bold moves in reclaiming her catalog, she has consistently shaped not just music but the business and culture around it. Her artistry continues to evolve, her fanbase only grows stronger, and her legacy as one of the most influential artists of her generation is firmly secured.

DSPy News & Updates: The Complete Guide to the Framework Changing AI Programming

The traditional approach to building AI applications through manual prompt engineering is becoming increasingly unsustainable. As language models become more powerful, the complexity of crafting, testing, and maintaining effective prompts has grown exponentially. Enter DSPy (Declarative Self-improving Python) – a revolutionary framework from Stanford NLP that fundamentally transforms how developers build AI applications.

Unlike traditional approaches that rely on brittle prompt strings and manual trial-and-error optimization, DSPy treats language model programs as modular, composable pipelines that can be automatically optimized for specific tasks. This article provides comprehensive coverage of the latest DSPy news, core concepts, practical implementation guidance, and insights into why this framework represents the future of compound AI systems.

What is DSPy? Beyond the Hype: Programming, Not Prompting

DSPy is an open-source framework that introduces a declarative programming model for building sophisticated AI applications. Instead of manually crafting and tweaking prompts, developers define what they want their system to accomplish using modular components called signatures and modules, then let DSPy’s optimizers automatically discover the best prompting strategies and parameter configurations.

The Core Philosophy: Declarative Self-Improving Python

The name ‘Declarative Self-improving Python’ encapsulates DSPy’s fundamental innovation. Rather than imperatively specifying exactly how a language model should process information (through detailed prompts), developers declaratively specify the task’s structure and desired outcomes. The framework then automatically:

1. Generates optimized prompts based on your task definition

2. Bootstraps few-shot examples from your training data

3. Continuously refines the system through compilation and optimization

4. Adapts seamlessly when you switch between different language models

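This is what the ‘self-improving’ label refers to: when you add training examples, change the metric, or swap the model, you recompile the program and the optimizer regenerates the prompts and demonstrations, so you do not rewrite them by hand for each iteration or model change.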

DSPy vs. Traditional Prompt Engineering: A Paradigm Shift

The differences between DSPy and traditional prompt engineering are fundamental, not superficial. Here’s a comprehensive comparison:

| Aspect | Traditional Prompting | DSPy |
| --- | --- | --- |
| Approach | Manual trial-and-error with prompt strings | Declarative task definition with automatic optimization |
| Maintenance | Brittle, breaks with model updates or task changes | Modular, portable across models and easily updated |
| Optimization | Manual tweaking by developers | Automated through compilation with optimizers |
| Scalability | Difficult to scale complex multi-step pipelines | Naturally scales to sophisticated compound systems |
| Model Portability | Must rewrite prompts for different models | Automatically adapts to any supported LM |

From Brittle Strings to Structured Signatures

Traditional prompt engineering requires developers to carefully craft text strings that guide the model’s behavior. A single word change can dramatically affect output quality, and these prompts often need complete rewrites when switching models or adjusting task requirements.

DSPy replaces this fragile approach with signatures – declarative specifications that define the input-output structure of your task. For example, instead of writing ‘Given the following context and question, provide a detailed answer…’, you simply define: ‘context, question -> answer’. DSPy handles the rest, automatically generating optimized prompts that work reliably.
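To make the idea of a string signature concrete, here is a minimal sketch of how a spec like ‘context, question -> answer’ could be split into named fields and turned into a prompt skeleton. This is an illustration only, not DSPy’s internal parser or prompt format; the function names `parse_signature` and `render_prompt_skeleton` are hypothetical.

```python
def parse_signature(spec: str) -> tuple[list[str], list[str]]:
    """Split 'a, b -> c' into (input_names, output_names)."""
    if spec.count("->") != 1:
        raise ValueError(f"expected exactly one '->' in {spec!r}")
    left, right = spec.split("->")
    inputs = [name.strip() for name in left.split(",") if name.strip()]
    outputs = [name.strip() for name in right.split(",") if name.strip()]
    if not inputs or not outputs:
        raise ValueError("a signature needs at least one input and one output")
    return inputs, outputs


def render_prompt_skeleton(spec: str) -> str:
    """Build a plain-text template that lists each field in order (illustrative)."""
    inputs, outputs = parse_signature(spec)
    lines = [f"Given the fields {', '.join(inputs)}, produce {', '.join(outputs)}.", ""]
    # Input fields get a placeholder; output fields are left open for the model.
    lines += [f"{name.title()}: {{{name}}}" for name in inputs]
    lines += [f"{name.title()}:" for name in outputs]
    return "\n".join(lines)


inputs, outputs = parse_signature("context, question -> answer")
skeleton = render_prompt_skeleton("context, question -> answer")
print(skeleton)
```

The point of the structure is that the field names, not a hand-written paragraph, carry the task definition, which is what lets an optimizer rewrite the surrounding wording later.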

How DSPy’s Optimizers Automate the ‘Prompt Tuning’ Work

DSPy’s revolutionary optimizers are what truly set it apart. These algorithms automatically discover the best way to configure your AI pipeline through a process called compilation. Key optimizers include:

• MIPROv2 – Multi-prompt Instruction Proposal Optimizer that generates and tests multiple instruction variants

• BootstrapFewShot – Automatically creates few-shot examples from your training data

• COPRO – Coordinate Ascent Prompt Optimization for systematic improvement

• BetterTogether – Combines prompt optimization with weight fine-tuning, alternating between the two so each builds on the other

These optimizers work by evaluating your pipeline against your specified metrics (like accuracy or F1 score), then iteratively refining prompts, examples, and model configurations until performance targets are met.
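As a rough illustration of what such a metric looks like, the sketch below implements token-level F1 and averages it over a small development set. The names `token_f1`, `average_score`, and `toy_program` are hypothetical stand-ins written in plain Python; they are not part of DSPy.

```python
from collections import Counter


def token_f1(prediction: str, reference: str) -> float:
    """Token-overlap F1 between a predicted and a reference answer."""
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    if not pred_tokens or not ref_tokens:
        # Both empty counts as a match; one empty counts as a miss.
        return float(pred_tokens == ref_tokens)
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


def average_score(program, devset, metric=token_f1) -> float:
    """Run `program` on each (question, gold_answer) pair and average the metric."""
    scores = [metric(program(question), gold) for question, gold in devset]
    return sum(scores) / len(scores)


def toy_program(question: str) -> str:
    # Stand-in for a real pipeline; it only "knows" one answer.
    return "Paris" if "France" in question else "unknown"


devset = [
    ("What is the capital of France?", "Paris"),
    ("What is the capital of Peru?", "Lima"),
]
score = average_score(toy_program, devset)
print(score)
```

An optimizer repeats this kind of evaluation for each candidate configuration and keeps the one with the highest average.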

Latest DSPy News & Key Updates for 2026

The DSPy ecosystem has experienced explosive growth over the past year, with significant advances in both the core framework and the broader research community. Here are the most important recent developments.

Recent Version Highlights: What’s New in DSPy v2.5

DSPy v2.5, the release this section covers, introduced several notable features:

Enhanced Multi-Module Optimization: The framework now supports joint optimization across multiple modules in complex pipelines, dramatically improving end-to-end performance for sophisticated RAG and agent systems.

Advanced Telemetry and Tracing: New built-in observability tools provide detailed insights into how your DSPy programs execute, making debugging and optimization significantly easier.

Expanded Model Support: Native integration with the latest models from OpenAI (GPT-4, o1), Anthropic (Claude Sonnet 4), Google (Gemini 2.0), and improved support for local models through Ollama and vLLM.

New dspy.Retrieve Module: A unified interface for retrieval that works seamlessly with vector databases, traditional search engines, and hybrid approaches.

Performance Improvements: Compilation and optimization are now 3-5x faster thanks to improved caching and parallel evaluation strategies.

Spotlight: Breakthrough Optimizers – MIPROv2 and BetterTogether

Two optimizers have emerged as game-changers for DSPy practitioners:

MIPROv2 (Multi-prompt Instruction Proposal Optimizer v2) optimizes both the instructions and the few-shot demonstrations of every module in a program. It first bootstraps candidate demonstrations from your training data, then has a language model propose candidate instructions grounded in summaries of your data and program, and finally uses Bayesian optimization to search for the combination that scores best on your metric. Gains depend on the task and the quality of the metric, so treat headline figures such as 15-30% over manually crafted prompts as something to verify on your own evaluation set.
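The propose-then-evaluate idea behind instruction optimizers can be pictured as a search over candidate configurations. The sketch below is not MIPROv2: it scores every combination exhaustively, whereas MIPROv2 uses Bayesian optimization to sample the space. The candidate instructions, demo sets, and `toy_score` function are all hypothetical placeholders.

```python
from itertools import product


def search_prompt_configs(instructions, demo_sets, score_fn):
    """Score every (instruction, demos) pair and return the best pair with its score."""
    best_config, best_score = None, float("-inf")
    for instruction, demos in product(instructions, demo_sets):
        score = score_fn(instruction, demos)
        if score > best_score:
            best_config, best_score = (instruction, demos), score
    return best_config, best_score


# Hypothetical candidates; a real optimizer would have an LM propose these.
instructions = ["Answer briefly.", "Think step by step, then answer briefly."]
demo_sets = [
    (),
    (("2+2?", "4"),),
    (("2+2?", "4"), ("3+3?", "6")),
]


def toy_score(instruction, demos):
    # Stand-in for "run the program on a validation batch and average the metric".
    return 0.5 + 0.2 * ("step by step" in instruction) + 0.1 * len(demos)


best_config, best_score = search_prompt_configs(instructions, demo_sets, toy_score)
print(best_config[0], len(best_config[1]), round(best_score, 2))
```

Exhaustive search is only practical for tiny spaces like this one, which is why real optimizers use smarter sampling and minibatch evaluation.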

BetterTogether combines two kinds of optimization that are usually applied separately: prompt optimization and weight fine-tuning. It alternates between them in a configurable sequence so that each step builds on the gains of the other, which can let a smaller fine-tuned model approach the quality of a larger one. Any resulting cost savings depend heavily on the task, so validate figures such as 60-80% on your own workload before relying on them.

Ecosystem Growth: Notable Community Projects and Research

The DSPy community has grown from a research project to a thriving ecosystem:

PAPILLON – A DSPy-based privacy-preserving pipeline in which a local model mediates requests to a more powerful external model, showing how DSPy programs can coordinate several language models.

Storm – An open-source research assistant that uses DSPy to automatically generate Wikipedia-quality articles from scratch, complete with proper citations.

Production Deployments – Major tech companies and startups have begun deploying DSPy-based systems at scale, with reported improvements in development velocity, system reliability, and cost efficiency.

Academic Research – Over 50 peer-reviewed papers have been published using or extending DSPy, covering applications from legal document analysis to scientific literature review.

How to Get Started with DSPy: A Practical Walkthrough

Getting started with DSPy is straightforward, especially if you’re already familiar with Python and basic LLM concepts. Here’s a step-by-step guide to building your first DSPy application.

Step 1: Installation and LM Configuration

Install DSPy using pip:

pip install dspy

Next, configure your language model. DSPy works with any major LM provider:

import dspy

# OpenAI
lm = dspy.LM('openai/gpt-4', api_key='your-key')
dspy.configure(lm=lm)

DSPy supports OpenAI, Anthropic, Google, Cohere, together.ai, local models via Ollama, and any LiteLLM-compatible provider.

Step 2: Building Your First Module with Signatures

Let’s create a simple question-answering module. First, define a signature that specifies the task structure:

class QA(dspy.Signature):
    """Answer questions with short factual answers."""

    question = dspy.InputField()
    answer = dspy.OutputField(desc='often 1-5 words')

Now create a module using this signature:

class SimpleQA(dspy.Module):
    def __init__(self):
        super().__init__()
        # ChainOfThought asks the model for reasoning before the answer field
        self.generate_answer = dspy.ChainOfThought(QA)

    def forward(self, question):
        return self.generate_answer(question=question)

Notice how we use dspy.ChainOfThought to automatically add reasoning steps – no manual prompt engineering required!

Step 3: Compiling & Optimizing a Simple RAG Pipeline

Let’s build a more sophisticated RAG (Retrieval-Augmented Generation) system and optimize it:

class RAG(dspy.Module):
    def __init__(self, num_passages=3):
        super().__init__()
        # dspy.Retrieve relies on a retrieval model set via dspy.configure(rm=...)
        self.retrieve = dspy.Retrieve(k=num_passages)
        self.generate = dspy.ChainOfThought('context, question -> answer')

    def forward(self, question):
        context = self.retrieve(question).passages
        return self.generate(context=context, question=question)

Now compile this pipeline with an optimizer to automatically improve it:

from dspy.teleprompt import BootstrapFewShot

# your_metric and your_training_data are placeholders you supply
optimizer = BootstrapFewShot(metric=your_metric)
compiled_rag = optimizer.compile(RAG(), trainset=your_training_data)

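The optimizer runs the pipeline on your training examples, keeps the runs that pass your metric, and inserts them into the prompts as few-shot demonstrations. How much this helps depends on the task, the metric, and the underlying model, so compare the compiled and uncompiled programs on a held-out set rather than assuming a fixed gain such as 20-40%.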
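To show what the metric placeholder and the bootstrapping step might look like, here is a plain-Python sketch. The metric follows the `(example, pred, trace=None)` shape that DSPy optimizers call, but everything else (`bootstrap_demos`, `toy_program`, and the `SimpleNamespace` objects standing in for examples and predictions) is a hypothetical simplification, not DSPy’s implementation.

```python
from types import SimpleNamespace


def exact_match(example, pred, trace=None):
    """Metric in the (example, pred, trace=None) shape; case-insensitive match."""
    return example.answer.strip().lower() == pred.answer.strip().lower()


def bootstrap_demos(program, trainset, metric, max_demos=4):
    """Keep the program's own passing outputs as few-shot demonstrations."""
    demos = []
    for example in trainset:
        pred = program(example.question)
        if metric(example, pred):
            demos.append({"question": example.question, "answer": pred.answer})
        if len(demos) >= max_demos:
            break
    return demos


def toy_program(question):
    # Stand-in for an LM-backed module with limited knowledge.
    known = {"capital of France?": "Paris", "2 + 2?": "4"}
    return SimpleNamespace(answer=known.get(question, "unknown"))


trainset = [
    SimpleNamespace(question="capital of France?", answer="paris"),
    SimpleNamespace(question="capital of Peru?", answer="Lima"),
    SimpleNamespace(question="2 + 2?", answer="4"),
]
demos = bootstrap_demos(toy_program, trainset, exact_match)
print(demos)
```

Only the examples the program already gets right become demonstrations, which is why the quality of the metric matters so much.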

Real-World Applications and Use Cases of DSPy

DSPy excels in scenarios where traditional prompting falls short. Here are the most compelling use cases where DSPy delivers exceptional value.

Building Robust Question-Answering and RAG Systems

RAG systems are notoriously difficult to tune with traditional prompting. Different domains, document structures, and question types often require completely different prompting strategies. DSPy’s automatic optimization handles this complexity seamlessly.

Production RAG systems built with DSPy report significant improvements in answer quality and consistency. The framework automatically learns to format retrieved context optimally, select the most relevant passages, and generate answers that properly cite sources – all without manual prompt engineering.

Developing Self-Improving Chatbots and Agents

Agent systems that can use tools, maintain context, and make multi-step decisions benefit enormously from DSPy’s modular approach. Instead of crafting complex prompt chains for different agent scenarios, developers define agent behaviors as composable modules.

DSPy’s dspy.ReAct module implements the Reasoning-Acting pattern, automatically learning when to gather more information versus when to take action. The framework handles the intricate prompt engineering needed to maintain agent coherence across long conversations and complex task sequences.
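The reason-act-observe cycle itself is simple enough to sketch without any framework. The loop below is an illustration of the pattern, not the `dspy.ReAct` implementation: the `scripted_policy` function stands in for the language model, and the `lookup_population` tool with its data is made up for the example.

```python
def react_loop(question, policy, tools, max_steps=5):
    """Alternate reasoning and tool calls until the policy returns a final answer."""
    trajectory = []
    for _ in range(max_steps):
        thought, action, argument = policy(question, trajectory)
        if action == "finish":
            return argument, trajectory
        observation = tools[action](argument)
        trajectory.append((thought, action, argument, observation))
    return None, trajectory  # step budget exhausted without an answer


def lookup_population(city):
    # Hypothetical tool backed by a tiny made-up table.
    return {"Paris": "2.1 million"}.get(city, "unknown")


def scripted_policy(question, trajectory):
    # Stand-in for the LM: gather one fact, then answer with it.
    if not trajectory:
        return ("I need the population figure.", "lookup_population", "Paris")
    last_observation = trajectory[-1][3]
    return ("I have what I need.", "finish", f"About {last_observation}")


answer, steps = react_loop(
    "How many people live in Paris?",
    scripted_policy,
    {"lookup_population": lookup_population},
)
print(answer, len(steps))
```

In a real agent the policy is a prompted model, and that prompt is what an optimizer tunes.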

Streamlining Complex Summarization and Code Generation Tasks

Document summarization and code generation often require task-specific tuning to achieve the right balance of detail, accuracy, and style. DSPy’s optimizers can automatically discover the ideal prompting strategies for your specific requirements.

For code generation, DSPy modules can be compiled to generate code that matches your style guidelines, includes appropriate error handling, and follows domain-specific best practices – all learned from your example codebase rather than manually specified in prompts.

The Future of DSPy: Roadmap and Community Direction

The DSPy project continues to evolve rapidly, with exciting developments on the horizon that will further cement its position as the leading framework for building reliable AI systems.

Insights from the Maintainers: Upcoming Features

The core development team has shared several priorities for upcoming releases:

• Native Integration with Vector Databases: Deeper integration with popular vector databases to streamline RAG development

• Advanced Caching Strategies: Intelligent caching to dramatically reduce costs and latency in production

• Multi-Task Learning: Ability to jointly optimize across multiple related tasks

• Enhanced Debugging Tools: More sophisticated visualization and analysis capabilities for understanding pipeline behavior

• Production Deployment Utilities: Better tooling for monitoring, A/B testing, and gradual rollouts

Why DSPy Represents the Future of Compound AI Systems

As AI applications grow more sophisticated, the industry is moving away from single-model, single-prompt approaches toward compound AI systems – architectures that combine multiple models, retrieval systems, tools, and reasoning patterns.

DSPy is uniquely positioned to lead this transformation. Its declarative programming model and automatic optimization capabilities scale naturally to arbitrarily complex systems. While traditional prompt engineering becomes exponentially more difficult as system complexity increases, DSPy’s modular approach makes it easier to build, understand, and maintain sophisticated AI applications.

The framework embodies a fundamental principle: AI systems should be built like software, not like art projects. As the field matures, this engineering-first approach will become the industry standard, and DSPy is paving the way.

Frequently Asked Questions

What is DSPy in simple terms?

DSPy is a Python framework that lets you build AI applications by describing what you want them to do (declaratively) rather than manually writing prompts. It automatically optimizes your AI system to work better with your specific data and task requirements.

How is DSPy different from LangChain or traditional prompt engineering?

While LangChain focuses on chaining together different LLM calls and tools, DSPy focuses on automatically optimizing those calls. Traditional prompt engineering requires manual trial-and-error, while DSPy uses algorithms to discover optimal prompts and configurations automatically. DSPy also makes your code portable across different models without rewriting prompts.

Is DSPy worth learning? What are its main benefits?

Yes, especially if you’re building production AI systems. The main benefits are: dramatically faster development (no manual prompt tuning), more reliable systems (automatic optimization finds better solutions), easier maintenance (modular code that’s portable across models), and better performance (optimizers often beat hand-crafted prompts).

What are the best use cases for DSPy?

DSPy excels at: question-answering systems, RAG pipelines, chatbots and agents, text classification, summarization, code generation, and any task requiring multi-step reasoning or tool use. It’s particularly valuable when you need consistent performance across different models or domains.

How do DSPy optimizers like MIPROv2 actually work?

Optimizers work by evaluating your AI pipeline against your training data and specified metrics, then automatically adjusting prompts, examples, and configurations to improve performance. MIPROv2 specifically bootstraps candidate few-shot demonstrations, has a language model propose candidate instructions, and uses Bayesian optimization to select the combination that scores best.

Can I use DSPy with local/open-source models (like Llama or Ollama)?

Absolutely! DSPy has excellent support for local models through Ollama, vLLM, and other providers. You can configure DSPy to use any model that supports a chat or completion API, including self-hosted open-source models. This makes DSPy ideal for cost-conscious or privacy-sensitive applications.

What’s the latest version of DSPy, and where can I find the release notes?

This article covers DSPy v2.5, and newer releases have since been published, so check the project’s release page for the current version. You can find detailed release notes and version history on the official GitHub repository at github.com/stanfordnlp/dspy. The repository also includes migration guides and changelog documentation for each release.

Conclusion

DSPy represents a fundamental paradigm shift in how we build AI applications. By moving from manual prompt engineering to declarative, optimizable systems, it addresses the core challenges that have plagued LLM application development: brittleness, lack of portability, difficulty of maintenance, and inconsistent performance.

As the framework continues to mature and the community grows, DSPy is positioned to become the standard way sophisticated AI systems are built. Whether you’re developing a simple question-answering system or a complex multi-agent application, DSPy provides the tools, abstractions, and automatic optimization capabilities you need to succeed.

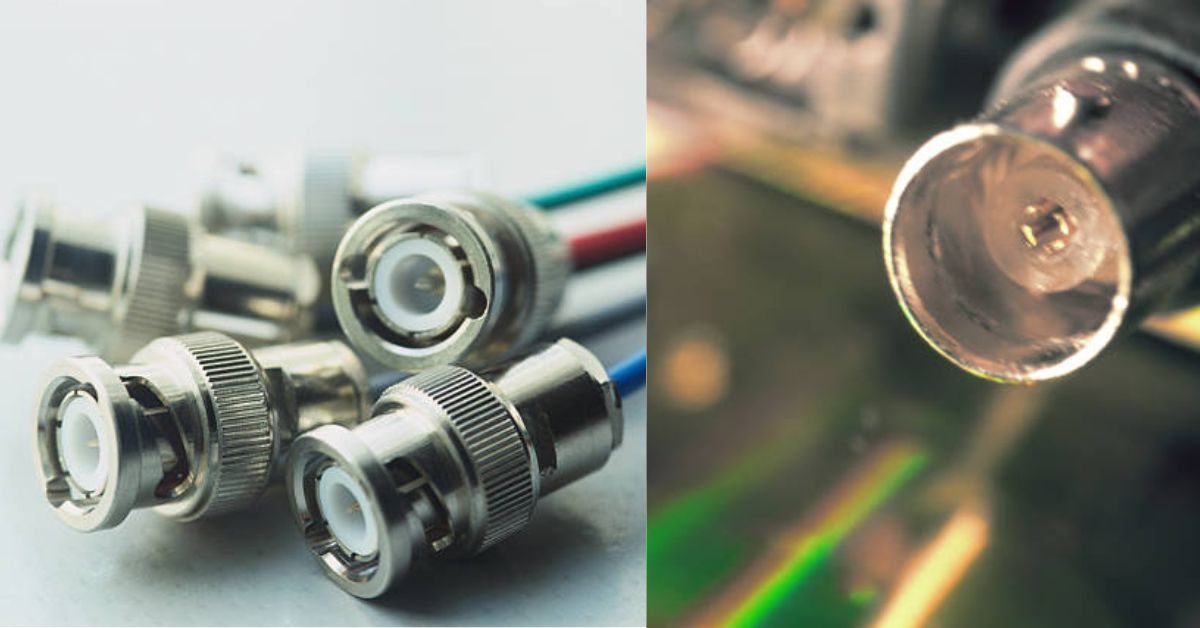

How to Design a Right Angle BNC Connector in KiCad: A Complete Guide

Designing RF circuits on PCBs requires careful attention to component selection and layout. Right angle BNC connectors are essential for space-saving designs while maintaining excellent signal integrity for oscilloscopes, video equipment, and RF test setups. This comprehensive guide walks you through creating and implementing right angle BNC connectors in KiCad, from footprint selection to final PCB layout.

Whether you’re building test equipment, video distribution systems, or RF measurement tools, understanding how to properly integrate these connectors will ensure your designs perform reliably at high frequencies.

Understanding Right Angle BNC Connectors for PCB Design

Right angle BNC connectors solder to the PCB with their mating axis parallel to the board surface, so the cable exits sideways past the board edge instead of straight up. This offers several key advantages over straight connectors: a lower overall height profile, cleaner cable routing along the plane of the board, and less mechanical stress on the board when cables are connected or disconnected.

These connectors come in two main mounting styles: through-hole technology (THT) and surface-mount (SMD). THT versions provide superior mechanical stability and are ideal for applications with frequent cable connections. They typically feature multiple pins: a center conductor for the signal, a shield connection for ground, and mechanical stability pins that secure the connector to the board.

Most RF applications use connectors designed for either 50Ω or 75Ω impedance. Video and broadcast equipment typically uses 75Ω, while test equipment and RF communications favor 50Ω. Selecting the correct impedance matching for your application is critical for signal integrity.

Common applications include bench test equipment, oscilloscope inputs, video signal distribution, RF function generators, and spectrum analyzer connections. The right angle configuration is particularly valuable when board space is limited or when you need clean cable management in rack-mounted equipment.

Step 1: Finding or Creating the Correct BNC Footprint

Option A: Using a Pre-made KiCad Library (Recommended)

For most projects, using an existing library is the fastest and most reliable approach. The official KiCad library repository contains several BNC connector footprints that have been verified by the community.

Start by opening KiCad’s Preferences menu and navigating to Manage Footprint Libraries. Check if you have the Connector_Coaxial library enabled. This library includes several right angle BNC options with proper pad sizing and mechanical clearances already defined.

Würth Elektronik provides an excellent free library for KiCad that includes their WR-BNC connector series. These footprints include comprehensive 3D models and are based directly on manufacturer specifications. You can download their library from the Würth Elektronik website and add it to your KiCad installation.

When browsing for footprints, look for part numbers that match your intended connector. Common models include the TE Connectivity 1-1337543-0 series and Amphenol RF right angle jacks. The footprint name should clearly indicate whether it’s designed for THT mounting and specify any unique characteristics.

Option B: Building a Custom Footprint from a Datasheet

Sometimes you need a specific connector model that doesn’t have a pre-existing KiCad footprint. Creating a custom footprint requires careful interpretation of the manufacturer datasheet, but the process ensures perfect compatibility with your chosen component.

Begin by obtaining the official datasheet for your connector. Search for the mechanical drawing section, which typically shows a top-down view with all pad locations dimensioned. Look for critical specifications including the center conductor pin position, shield pin locations, mounting hole or stability pin positions, pad diameters for each pin type, and recommended PCB clearance areas.

Open KiCad’s Footprint Editor and create a new footprint. Name it clearly using the manufacturer part number. Set your grid to 0.5mm or finer for precise pad placement. Most datasheets dimension from a reference point, usually the center of the main signal pin.

Start by placing the center conductor pad. This is your signal connection and typically requires a specific pad size to ensure proper impedance transition. The datasheet will specify both the finished hole size and the pad diameter. BNC connectors often use a 1.0mm to 1.3mm hole with a 2.0mm to 2.5mm pad.
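A quick arithmetic check worth doing at this point is the annular ring, the copper left around the drilled hole, which is half the difference between pad and hole diameters. The sketch below runs that calculation over the ranges quoted above; the 0.15mm minimum is a hypothetical fabrication limit, so substitute the figure from your own PCB manufacturer.

```python
def annular_ring_mm(pad_diameter_mm: float, hole_diameter_mm: float) -> float:
    """Copper width left around a drilled hole, in millimetres."""
    if hole_diameter_mm >= pad_diameter_mm:
        raise ValueError("hole must be smaller than the pad")
    return (pad_diameter_mm - hole_diameter_mm) / 2


MIN_RING_MM = 0.15  # hypothetical fab limit; check your manufacturer's rules

# (pad, hole) combinations spanning the ranges mentioned in the text
rings = {
    (pad, hole): annular_ring_mm(pad, hole)
    for pad, hole in [(2.0, 1.0), (2.0, 1.3), (2.5, 1.3)]
}
all_ok = all(ring >= MIN_RING_MM for ring in rings.values())

for (pad, hole), ring in rings.items():
    print(f"pad {pad} mm, hole {hole} mm -> ring {ring:.2f} mm")
```

The smallest pad paired with the largest hole is the worst case, so that is the combination to check against your datasheet values.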

Next, add the shield pins. These provide the ground connection and are critical for RF performance. They’re usually positioned symmetrically around the center pin. The shield connection must have low impedance to your ground plane, so ensure adequate pad size and proper via placement in your final layout.

Many right angle BNC connectors include mechanical stability pins marked as P1*2 or similar in the datasheet. These are larger pins or mounting posts that don’t carry signal but prevent the connector from rotating or lifting during use. Place these as through-hole pads with the dimensions specified in the datasheet. While these should connect to ground for best shielding, they’re not the primary electrical ground path.

Add a courtyard outline that extends beyond the connector body by at least 0.25mm. This helps KiCad’s design rule checker prevent component overlap. Draw the fabrication layer outline showing the connector’s physical footprint, which helps during assembly and troubleshooting.

Include reference designator and value text on the silkscreen layer, positioned where they won’t be covered by the connector body. Save your footprint with a descriptive name including the manufacturer and part number.

Step 2: Assigning the Footprint in the Schematic

Creating the schematic symbol and linking it to your footprint is where many designers encounter confusion. The key is understanding which pins carry signals versus mechanical functions.

In KiCad’s schematic editor, place a BNC connector symbol from the Connector library. The standard symbol shows two pins: the center conductor (signal) and the shield (ground). This simplified representation works well for most applications.

Right-click the symbol and select Properties, then navigate to the Footprint assignment. Browse to your chosen footprint, whether from a library or your custom creation. Click OK to establish the link.

Here’s the critical part that trips up many users: your schematic symbol has two electrical connections, but your physical footprint may have four, five, or more pads. KiCad handles this through pin mapping in the footprint definition. The center conductor schematic pin maps to the physical center pin. The shield schematic pin maps to all shield pads and mechanical support pins simultaneously.
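One way to picture this mapping: every footprint pad carries a pad number, and pads that share a number end up on the net of the matching symbol pin. The sketch below models that with plain Python data. The pad list, descriptions, and net names are hypothetical and do not come from any particular connector.

```python
from collections import defaultdict

# Hypothetical footprint: (pad_number, description). Shield pins and
# mechanical posts all reuse pad number "2".
footprint_pads = [
    ("1", "center conductor"),
    ("2", "shield pin"),
    ("2", "shield pin"),
    ("2", "mechanical post"),
    ("2", "mechanical post"),
]

# Nets attached to the two symbol pins in the schematic (placeholder names).
symbol_pin_nets = {"1": "RF_IN", "2": "GND"}


def pads_per_net(pads, pin_nets):
    """Group footprint pads by the net of the symbol pin with the same number."""
    grouped = defaultdict(list)
    for number, description in pads:
        if number not in pin_nets:
            raise KeyError(f"pad {number!r} has no matching symbol pin")
        grouped[pin_nets[number]].append(description)
    return dict(grouped)


net_map = pads_per_net(footprint_pads, symbol_pin_nets)
print(net_map)
```

If a pad number in the footprint has no matching pin on the symbol, that pad stays unconnected, which is the mismatch to look for when a shield pad fails to join the ground net.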

When you generate the netlist, KiCad will connect all pads marked as shield or mechanical support to your ground net. This is the correct behavior. The large mechanical pads labeled P1*2 in many datasheets exist for physical stability but are electrically connected to ground to maintain shielding effectiveness.

If you’re working with a more complex connector that has separate shield and mechanical ground pins, you may need to modify the schematic symbol to include additional pins or verify that the footprint’s pin definitions match your expectations.

Step 3: PCB Layout and Routing Best Practices

Placing the Connector and Managing Constraints

Right angle BNC connectors mount at the board edge with the connector body extending beyond the PCB outline. Position your connector so the mounting pads are on the board but the keep-out area extends past the edge cut.

Maintain adequate clearance from other components. High-frequency signals are sensitive to nearby conductors, so keep at least 5mm clearance from adjacent circuitry where possible. Check your connector’s datasheet for recommended keep-out zones on both the top and bottom layers.

Consider mechanical access. Users need to plug and unplug cables, so ensure nothing blocks the connector opening. Panel-mounted designs should align the connector with the opening in your enclosure.

Routing the RF Trace for Optimal Performance

The trace connecting your BNC center pin to your circuit determines your signal integrity. For a 50Ω impedance, calculate the required trace width based on your PCB stackup. KiCad includes a PCB Calculator tool under the Tools menu that computes controlled impedance trace dimensions.

For a typical 1.6mm FR4 board with 1oz copper, a 50Ω microstrip trace on the surface layer is approximately 3mm wide with a solid ground plane on the layer beneath. Verify this calculation with your specific board parameters.
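As a rough cross-check of the figure above, the sketch below estimates microstrip impedance with the Hammerstad closed-form approximation. It assumes a two-layer board, so the dielectric height is taken as the full 1.6mm, and a relative permittivity of 4.5 for FR4; both values are assumptions, and copper thickness is ignored. The function names are illustrative and are not part of KiCad.

```python
import math


def microstrip_z0(width_mm: float, height_mm: float, er: float) -> float:
    """Approximate characteristic impedance (ohms) of a surface microstrip.

    Hammerstad approximation; copper thickness is ignored, so treat the
    result as a first estimate only.
    """
    u = width_mm / height_mm  # width-to-height ratio
    if u <= 1:
        # Narrow-trace branch of the approximation
        eps_eff = (er + 1) / 2 + (er - 1) / 2 * (
            (1 + 12 / u) ** -0.5 + 0.04 * (1 - u) ** 2
        )
        return 60 / math.sqrt(eps_eff) * math.log(8 / u + u / 4)
    # Wide-trace branch of the approximation
    eps_eff = (er + 1) / 2 + (er - 1) / 2 * (1 + 12 / u) ** -0.5
    return 120 * math.pi / (
        math.sqrt(eps_eff) * (u + 1.393 + 0.667 * math.log(u + 1.444))
    )


def width_for_z0(target_ohms: float, height_mm: float, er: float) -> float:
    """Find the trace width (mm) for a target impedance by bisection."""
    lo, hi = 0.05, 20.0  # search bounds in mm; impedance falls as width grows
    for _ in range(60):
        mid = (lo + hi) / 2
        if microstrip_z0(mid, height_mm, er) > target_ohms:
            lo = mid  # trace too narrow, impedance too high
        else:
            hi = mid
    return (lo + hi) / 2


if __name__ == "__main__":
    print(f"Z0 at 3.0 mm: {microstrip_z0(3.0, 1.6, 4.5):.1f} ohms")
    print(f"Width for 50 ohms: {width_for_z0(50.0, 1.6, 4.5):.2f} mm")
```

On a four-layer board the dielectric between the surface layer and the first inner plane is much thinner, so the same 50Ω target gives a much narrower trace. Use the stackup values from your fabricator rather than these defaults.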

Route your RF trace as short and straight as possible. Every bend, via, and discontinuity creates impedance changes that degrade signal quality. If you must turn, use 45-degree angles rather than 90-degree corners to minimize reflections.

Maintain a continuous ground plane beneath your entire signal path. The return current flows in the ground plane directly under your signal trace. Any gaps or splits in this plane force current to take longer paths, increasing inductance and degrading performance.

Minimize vias in the RF path. If you must change layers, place a ground via immediately adjacent to the signal via. This provides a short return path and maintains the impedance as closely as possible. Use multiple ground vias around your connector’s shield pins to create a low-impedance connection to your ground plane.

Connect the shield pins to your ground plane with generous copper pours or multiple vias. The shield connection should have the lowest possible impedance at your operating frequency. For frequencies above 1 GHz, consider stitching vias around the connector area to create a virtual shield wall.
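A common rule of thumb, not a requirement from any connector datasheet, is to space stitching vias no further apart than about one twentieth of the wavelength in the board material. The sketch below applies that rule; the 1/20 fraction and the permittivity of 4.5 are assumptions you should adjust to your own design guidelines.

```python
import math

C_MM_PER_S = 299_792_458_000.0  # speed of light in mm/s


def max_stitch_spacing_mm(freq_hz: float, er: float, fraction: float = 20.0) -> float:
    """Maximum via-to-via spacing (mm) using a wavelength/fraction rule of thumb."""
    wavelength_mm = C_MM_PER_S / (freq_hz * math.sqrt(er))  # wavelength in the dielectric
    return wavelength_mm / fraction


if __name__ == "__main__":
    for f_ghz in (1, 3, 6):
        spacing = max_stitch_spacing_mm(f_ghz * 1e9, er=4.5)
        print(f"{f_ghz} GHz: keep stitching vias within about {spacing:.1f} mm")
```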

Common Pitfalls and How to Avoid Them

One frequent mistake is selecting a footprint that doesn’t match the physical connector. Always cross-reference the manufacturer part number between your component and the KiCad footprint. Even small dimensional errors can make a connector impossible to mount or result in poor solder joints.

Poor ground connections cause more RF problems than any other single issue. Ensure your shield pins connect to a solid ground plane with multiple vias. A single thin trace to ground creates inductance that degrades shielding effectiveness. The shield should connect to ground with an impedance of less than one ohm across your frequency range.

Ignoring mechanical support pins leads to reliability problems. Those large pads marked as mechanical or mounting pins prevent the connector from rotating or lifting when cables are connected. While they’re not the primary electrical path, they should still connect to ground and must be soldered properly.

Inadequate PCB edge clearance prevents proper connector mounting. Check your board manufacturer’s edge clearance requirements and ensure your connector pads are sufficiently far from the board edge to avoid manufacturing issues. Typically, maintain at least 0.5mm from the edge cut to any pad or trace.

During the testing phase, verify continuity between your circuit ground and the connector shield using a multimeter. Poor shield grounding often doesn’t cause obvious failures but degrades signal quality and increases susceptibility to interference.

Neglecting impedance control results in signal reflections and reduced bandwidth. Calculate your trace width correctly and maintain consistent geometry throughout the signal path. Even a short section of mismatched impedance can cause significant signal degradation at high frequencies.

Recommended Right Angle BNC Connector Models

| Manufacturer | Part Number | Description | Key Specifications | Typical Use |
|---|---|---|---|---|
| Würth Elektronik | 60711002241501 | WR-BNC THT Right Angle Jack | 50Ω, THT, DC-4 GHz | General RF applications |
| TE Connectivity | 1-1337543-0 | Right Angle PCB Jack | 50Ω, THT, DC-3 GHz | Test equipment, instruments |
| Amphenol RF | 031-6575-RFX | Right Angle BNC Jack | 75Ω, THT, DC-1 GHz | Video, broadcast equipment |
| Cinch Connectivity | 415-0028-024 | Right Angle PCB Mount | 50Ω, THT, DC-4 GHz | Industrial RF systems |
| Rosenberger | 51K253-400N5 | High Performance BNC | 50Ω, THT, DC-6 GHz | Precision measurement |

These connectors are widely available through distributors including Digi-Key, Mouser Electronics, RS Components, and Farnell. Always verify the current availability and specifications with your preferred supplier, as manufacturers occasionally revise designs or introduce improved versions.

Frequently Asked Questions

What is the SHIELD pin on a BNC connector footprint?

The SHIELD pin is the primary ground connection on your BNC connector. It connects to the outer conductor of the coaxial cable and must tie directly to your PCB’s ground plane. This shield provides electromagnetic shielding that prevents interference from entering or leaving your signal path. Without a proper shield connection, your RF performance will suffer from noise and potential oscillation. Connect the shield to ground using multiple vias and a large copper area to minimize impedance.

What do the extra large pads (P1*2) mean on my BNC footprint?

These pads are mechanical stability or mounting pins. They’re not part of the primary electrical signal path but provide physical support to prevent the connector from rotating, tilting, or pulling away from the PCB during cable insertion and removal. While they should be connected to ground for electromagnetic shielding purposes, they don’t need to carry significant current. The larger size accommodates bigger pins that provide mechanical strength. Always solder these pins even though they’re not electrically critical, as they’re essential for reliability.

Can I use a surface-mount (SMD) right-angle BNC connector?

Yes, SMD right angle BNC connectors are available and suitable for higher-frequency applications where you want to minimize the via transitions to internal layers. SMD versions typically have lower profile and can be assembled with automated pick-and-place equipment. However, they offer less mechanical stability than THT versions, making them less suitable for applications with frequent cable connections. For bench test equipment that sees daily use, THT remains the better choice. For embedded systems with semi-permanent cable connections, SMD can work well.

How do I ensure good signal integrity with a right-angle BNC on my PCB?

Good signal integrity requires controlled impedance throughout your signal path. Start by calculating the correct trace width for your target impedance using your board stackup parameters. Maintain this width consistently from the connector to your destination. Ensure a continuous ground plane beneath the entire trace path with no gaps or splits. Minimize the trace length and avoid unnecessary vias or bends. Connect the connector shield to ground with multiple vias placed symmetrically around the connector. The shorter and more direct your signal path, the better your high-frequency performance will be.

Where can I find free KiCad libraries for BNC connectors?

The official KiCad library repository on GitHub includes Connector_Coaxial, which contains several BNC connector footprints. Many connector manufacturers provide free KiCad libraries for their products, including Würth Elektronik, TE Connectivity, and Amphenol RF. These manufacturer libraries often include 3D models and are based directly on their datasheets, ensuring accuracy. You can also find community-contributed libraries on GitHub and the KiCad forums. Always verify any third-party footprint against the actual datasheet before using it in production designs.

Conclusion

Implementing right angle BNC connectors in KiCad requires attention to detail in both footprint selection and PCB layout. By following the steps outlined in this guide, you’ll create reliable, high-performance RF connections that maintain signal integrity while saving board space.


Monitoring Problems: Top Challenges & How to Solve Them in 2026

IT monitoring stands as the central nervous system of modern organizations, continuously tracking the health and performance of digital infrastructure. Without effective monitoring, businesses operate blind, unable to detect performance bottlenecks, predict failures, or maintain optimal system availability. The stakes are extraordinarily high: according to Gartner research, the average cost of IT downtime reaches $42,000 per hour, a figure that excludes reputational damage and lost productivity.

Yet despite technological advances, IT teams face persistent monitoring challenges that threaten network health and business continuity. These problems span traditional network infrastructure, cloud environments, containerized applications, and IoT ecosystems. Whether you’re managing distributed networks across multiple sites, dealing with alert fatigue from noisy monitoring systems, or struggling to establish reliable performance baselines, understanding these challenges is the first step toward resolution.

This comprehensive guide examines the eight most critical monitoring problems that keep IT professionals up at night, providing actionable solutions to overcome each obstacle and ensure robust system uptime and performance.

The 8 Most Common Monitoring Problems & How to Overcome Them

1. Lack of Complete Visibility & Network Blind Spots

Network blind spots represent one of the most dangerous monitoring problems facing modern IT operations. These visibility gaps occur when monitoring systems fail to discover all network devices, leaving routers, switches, servers, or endpoints unmonitored. The consequences can be severe—an unmonitored device that fails can trigger cascading outages affecting critical business services.

Blind spots typically emerge from several sources: manual device entry processes that inevitably miss assets, rapid infrastructure changes that outpace documentation efforts, shadow IT deployments that bypass standard approval workflows, and complex network architectures where devices exist across multiple locations and cloud environments.

Solution: Implement Automatic Device Discovery & Comprehensive Mapping

Modern network monitoring tools eliminate blind spots through automatic device discovery capabilities. These systems continuously scan defined IP ranges, interrogate SNMP-enabled devices, and query cloud APIs to maintain an accurate, real-time inventory of all network infrastructure. Advanced solutions like ManageEngine OpManager combine multiple discovery protocols to identify devices that might be missed by single-method approaches.
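To make the idea concrete, the sketch below compares the addresses that answered a discovery scan against the monitored inventory and reports the difference. It is a simplified illustration and does not reflect how any particular product works: the scan results are passed in as a plain list, and `find_blind_spots` is a hypothetical helper name.

```python
import ipaddress


def find_blind_spots(cidr, responding, inventory):
    """Return responding addresses inside `cidr` that are missing from the inventory.

    cidr: network range that discovery is responsible for, e.g. "10.0.0.0/24"
    responding: addresses that answered a scan (ICMP, SNMP, or similar)
    inventory: addresses already registered in the monitoring system
    """
    network = ipaddress.ip_network(cidr)
    in_scope = {ip for ip in responding if ipaddress.ip_address(ip) in network}
    return sorted(in_scope - set(inventory), key=ipaddress.ip_address)


if __name__ == "__main__":
    scanned = ["10.0.0.2", "10.0.0.5", "10.0.0.10"]
    monitored = ["10.0.0.2"]
    # 10.0.0.10 lies outside the /29, so only 10.0.0.5 is reported
    print(find_blind_spots("10.0.0.0/29", scanned, monitored))
```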

Equally important is comprehensive network visualization through topology maps and network diagrams. Layer 2 maps show physical connectivity between switches and endpoints, while Layer 3 maps display routing relationships. Business views organize devices by function or location, helping teams quickly understand dependencies and potential impact zones during outages.

The combination of automated discovery and visual mapping transforms network administration from reactive firefighting to proactive management, ensuring complete visibility across the entire infrastructure.

2. The Balancing Act: Active vs. Passive Monitoring

Organizations often struggle to choose between active monitoring and passive monitoring approaches, yet this represents a false dichotomy. Each methodology offers distinct advantages: active monitoring provides real-time status through continuous polling and synthetic transactions, while passive monitoring analyzes actual network traffic and user experience without generating additional load.

Active monitoring excels at immediate fault detection. By continuously querying devices via SNMP, ICMP, or API calls, it can instantly detect when a device stops responding or crosses a performance threshold. However, this approach generates monitoring traffic that can become problematic at scale and may miss intermittent issues that occur between polling intervals.
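The polling-interval gap is easy to see in a small simulation. The sketch below assumes polls fire at fixed multiples of the interval starting at time zero, and that an outage is detected only if a poll lands inside it; both are simplifications for illustration.

```python
def detected_outages(outages, poll_interval_s, horizon_s):
    """Return the outages that at least one poll lands inside.

    outages: list of (start_s, end_s) tuples in whole seconds, end exclusive
    Polls are assumed to fire at t = 0, poll_interval_s, 2 * poll_interval_s, ...
    """
    poll_times = range(0, horizon_s, poll_interval_s)
    return [
        (start, end)
        for start, end in outages
        if any(start <= t < end for t in poll_times)
    ]


if __name__ == "__main__":
    outages = [(70, 100), (290, 320), (610, 615)]
    for interval in (300, 30):
        seen = detected_outages(outages, interval, horizon_s=900)
        print(f"{interval}s polling detects {len(seen)} of {len(outages)} outages")
```

Shortening the interval catches more short outages but multiplies the polling traffic, which is the trade-off described above.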

Passive monitoring captures actual user experience and traffic patterns without affecting network performance. It processes SNMP traps, syslog messages, and flow data to build comprehensive performance baselines and identify trends. However, it operates reactively, detecting problems only after they impact production traffic.

Solution: Adopt a Tool That Supports Hybrid Monitoring

The most effective monitoring strategies combine both methodologies into a unified hybrid approach. Modern monitoring platforms integrate active polling for real-time fault notification with passive analysis of traffic patterns and performance baselines. This combination provides proactive alerts when thresholds are exceeded while simultaneously building historical context for capacity planning and trend analysis.

For example, active monitoring might immediately alert when a router’s CPU utilization exceeds 90%, while passive monitoring reveals that this threshold is regularly reached during morning login hours, indicating a capacity planning issue rather than an acute fault. Together, these approaches deliver both rapid incident response and strategic infrastructure insights.

3. Alert Fatigue and Ineffective Notifications

Alert fatigue has become one of the most insidious monitoring problems, undermining the effectiveness of even sophisticated monitoring systems. When monitoring tools generate excessive or irrelevant alerts, IT teams become desensitized, leading to critical notifications being ignored amid the noise. Studies show that technicians who receive hundreds of daily alerts begin treating all warnings with equal skepticism, potentially missing genuine emergencies.

This problem stems from several root causes: overly aggressive threshold settings that trigger on minor fluctuations, lack of context-aware alerting that fails to distinguish between normal variation and actual problems, duplicate alerts from correlated events that flood notification channels, and poorly configured notification profiles that route all alerts to everyone regardless of severity or responsibility.

Solution: Configure Smart, Threshold-Based Alert Profiles

Addressing alert fatigue requires intelligent notification configuration that balances completeness with relevance. Start by implementing adaptive thresholds that learn normal performance baselines and alert only on statistically significant deviations. Modern monitoring tools use machine learning to understand typical behavior patterns, reducing false positives from expected daily fluctuations.
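One simple way to express "statistically significant deviation" is a z-score against recent history. The sketch below is a minimal stand-in for the learned baselines that commercial tools provide; the three-sigma limit is an assumed default, and real traffic is often not normally distributed.

```python
from statistics import mean, stdev


def is_anomalous(history, value, z_limit=3.0):
    """Flag `value` if it sits more than `z_limit` standard deviations from the mean."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return value != mu  # perfectly flat history: any change is a deviation
    return abs(value - mu) / sigma > z_limit


if __name__ == "__main__":
    cpu_history = [40, 42, 41, 39, 43, 40, 41, 42]
    print(is_anomalous(cpu_history, 44))  # small fluctuation
    print(is_anomalous(cpu_history, 90))  # large jump
```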

Create role-based notification profiles that route alerts based on severity and technical expertise. Critical infrastructure failures might trigger immediate SMS notifications to senior engineers, while informational alerts generate email summaries for review during business hours. Implement alert correlation to group related events, sending a single comprehensive notification rather than dozens of individual alerts when a core switch fails and all downstream devices become unreachable.
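The core-switch example can be sketched as a walk up a dependency tree: a device's alert is suppressed whenever something upstream of it is also down. The `parent_of` mapping and device names below are hypothetical, and the sketch assumes each device has a single upstream parent.

```python
def correlate_alerts(down_devices, parent_of):
    """Split down devices into root causes and suppressed downstream alerts.

    parent_of maps each device to its upstream device (None for the top).
    A device is suppressed when any of its ancestors is also down.
    """
    down = set(down_devices)
    roots, suppressed = [], []
    for device in sorted(down):
        ancestor = parent_of.get(device)
        seen = set()  # guards against accidental cycles in the mapping
        while ancestor is not None and ancestor not in down and ancestor not in seen:
            seen.add(ancestor)
            ancestor = parent_of.get(ancestor)
        if ancestor in down:
            suppressed.append(device)
        else:
            roots.append(device)
    return roots, suppressed


if __name__ == "__main__":
    topology = {
        "core-sw": None,
        "access-sw1": "core-sw",
        "access-sw2": "core-sw",
        "printer": "access-sw1",
    }
    roots, suppressed = correlate_alerts(
        ["core-sw", "access-sw1", "access-sw2", "printer"], topology
    )
    print(f"Notify on {roots}; suppress {len(suppressed)} downstream alerts")
```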

Regular alert tuning based on team feedback ensures that notification policies evolve with infrastructure changes and operational requirements, maintaining the signal-to-noise ratio that keeps teams responsive without overwhelming them.

4. Managing Distributed and Hybrid Network Architectures

The rise of distributed work models and hybrid cloud architectures has dramatically complicated network monitoring. Organizations now operate infrastructure across corporate headquarters, remote offices, cloud platforms (AWS, Azure, Google Cloud), and edge locations. Each environment may have distinct monitoring requirements, security constraints, and connectivity characteristics.

Managing this complexity through disparate monitoring tools creates operational nightmares. Teams struggle with inconsistent visibility across environments, delayed troubleshooting when problems span on-premises and cloud resources, difficulty establishing end-to-end service monitoring, and excessive tool switching that wastes time and increases error probability.

Solution: Utilize Centralized Management for Distributed Systems

Effective management of distributed networks requires a centralized monitoring platform that provides a single pane of glass view across all environments. This unified approach aggregates data from network devices, servers, applications, and cloud services into consolidated dashboards that reveal the complete infrastructure status at a glance.

Deploy distributed polling engines at remote sites that perform local monitoring and forward summarized data to the central management console. This architecture reduces WAN bandwidth consumption while maintaining comprehensive visibility. The central platform correlates events across locations, identifying patterns that might indicate systemic issues rather than isolated failures.
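What "summarized data" means varies by product. As an illustration only, a remote poller might reduce each batch of raw samples to a handful of statistics before sending them over the WAN. The field names and the nearest-rank percentile below are assumptions.

```python
import math
from statistics import mean


def summarize(samples):
    """Reduce a batch of raw samples to a compact summary for forwarding."""
    if not samples:
        raise ValueError("summarize() needs at least one sample")
    ordered = sorted(samples)
    # Nearest-rank 95th percentile
    p95_index = max(0, math.ceil(0.95 * len(ordered)) - 1)
    return {
        "count": len(ordered),
        "min": ordered[0],
        "max": ordered[-1],
        "avg": mean(ordered),
        "p95": ordered[p95_index],
    }


if __name__ == "__main__":
    latency_ms = [12, 15, 11, 240, 13, 14, 12, 16, 13, 12]
    print(summarize(latency_ms))
```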

Cloud-native monitoring capabilities should integrate seamlessly with on-premises infrastructure monitoring, treating cloud resources as natural extensions of the corporate network. This approach ensures consistent monitoring policies, unified alerting, and comprehensive reporting across hybrid architectures.

5. Difficulty in Setting and Tracking Performance Baselines

Performance baselines serve as the foundation for effective monitoring, establishing normal operational parameters against which anomalies can be detected. However, creating and maintaining accurate baselines presents significant challenges. Manual baseline establishment proves time-consuming and error-prone, often capturing atypical conditions that skew future analysis.

Network behavior varies dramatically based on time of day, day of week, and seasonal business cycles. A baseline established during summer may be irrelevant during year-end processing. Infrastructure changes, application updates, and business growth continuously shift performance characteristics, rendering static baselines obsolete. Without accurate baselines, teams cannot distinguish between normal traffic patterns and emerging problems.

Solution: Leverage AI-Powered Baseline Automation

Modern monitoring platforms employ artificial intelligence and machine learning to automate baseline establishment and maintenance. These systems continuously analyze metrics across extended periods, identifying typical patterns while accounting for daily and weekly cycles. AI algorithms recognize when performance characteristics shift due to legitimate infrastructure changes versus actual problems.
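A much simpler, non-AI version of the same idea is to keep a separate baseline for each hour of the week, so that Monday 09:00 is compared only with other Monday mornings. The sketch below shows that bucketing; it is a plain average under stated assumptions, not the algorithm any specific platform uses.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean


def build_baseline(samples):
    """Average samples per (weekday, hour) slot.

    samples: iterable of (datetime, value) pairs
    Returns a dict keyed by (weekday, hour), where Monday is weekday 0.
    """
    buckets = defaultdict(list)
    for timestamp, value in samples:
        buckets[(timestamp.weekday(), timestamp.hour)].append(value)
    return {slot: mean(values) for slot, values in buckets.items()}


if __name__ == "__main__":
    history = [
        (datetime(2024, 1, 1, 9), 80),  # Monday 09:00
        (datetime(2024, 1, 8, 9), 90),  # the following Monday 09:00
        (datetime(2024, 1, 1, 3), 10),  # Monday 03:00
    ]
    baseline = build_baseline(history)
    print(baseline[(0, 9)], baseline[(0, 3)])
```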

Automated baseline systems dynamically adjust thresholds as network conditions evolve. When bandwidth utilization gradually increases due to business growth, the system recognizes this as a trend rather than triggering alerts on each incremental change. Conversely, sudden deviations from established patterns trigger immediate investigation.

This approach eliminates manual baseline management while providing more accurate anomaly detection. IT teams focus on investigating genuine problems rather than maintaining threshold configurations, significantly improving operational efficiency.

6. Inadequate Capacity Planning and Forecasting

Capacity planning failures represent costly monitoring problems that result in unexpected outages and emergency infrastructure purchases. Without effective forecasting, organizations either over-provision resources (wasting budget on unused capacity) or under-provision (experiencing performance degradation and downtime).

Traditional approaches rely on periodic manual analysis of utilization reports, often conducted quarterly or annually. This infrequent review fails to capture growth trends or identify impending capacity constraints until users report performance problems. By the time capacity issues become visible through user complaints, service quality has already suffered.

Solution: Use Historical Data for Predictive Forecasting

Advanced monitoring platforms transform capacity planning through automated forecasting based on historical trends. These systems continuously track resource utilization—bandwidth, storage, compute, memory—and project future requirements using statistical analysis and machine learning models.

Forecasting engines account for growth patterns, seasonal variations, and infrastructure changes to predict when capacity thresholds will be reached. Visual trend reports show projected resource exhaustion dates, enabling proactive upgrades before performance degrades. This approach prevents emergency purchases and service disruptions while optimizing infrastructure investments.
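The simplest form of such a projection is a straight-line fit through daily utilization samples. The sketch below uses ordinary least squares and assumes one evenly spaced sample per day; it ignores seasonality, so it illustrates the principle rather than replacing a real forecasting engine.

```python
def days_until_threshold(daily_usage, threshold):
    """Project how many days after the last sample a linear trend reaches `threshold`.

    Returns None when there is too little data or usage is not growing.
    """
    n = len(daily_usage)
    if n < 2:
        return None
    x_mean = (n - 1) / 2
    y_mean = sum(daily_usage) / n
    sxx = sum((x - x_mean) ** 2 for x in range(n))
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(daily_usage))
    slope = sxy / sxx
    if slope <= 0:
        return None  # flat or shrinking usage never reaches the threshold
    intercept = y_mean - slope * x_mean
    crossing_day = (threshold - intercept) / slope
    return max(0.0, crossing_day - (n - 1))


if __name__ == "__main__":
    disk_percent = [50, 52, 54, 56, 58]
    days = days_until_threshold(disk_percent, threshold=80)
    print(f"Projected to reach 80% in about {days:.0f} days")
```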

Organizations can model different growth scenarios—aggressive expansion, steady growth, optimization initiatives—to understand capacity implications and budget accordingly. This strategic visibility transforms capacity planning from reactive crisis management to proactive resource optimization.

7. The Complexity of Modern Stacks: Cloud, Containers, & IoT

Modern IT infrastructure has evolved far beyond traditional networks of physical servers and routers. Today’s environments encompass public cloud services, containerized applications running in Kubernetes clusters, serverless functions, microservices architectures, edge computing nodes, and vast IoT device fleets. Each technology introduces unique monitoring challenges that traditional network monitoring tools cannot address.

Cloud environments present dynamic resource allocation challenges where virtual machines and services scale automatically based on demand. Container platforms feature ephemeral workloads that appear and disappear within seconds, making traditional device-centric monitoring ineffective. IoT deployments involve thousands or millions of low-power devices with limited monitoring capabilities. Application performance monitoring requires visibility into code-level execution, API dependencies, and user transaction flows.

Solution: Choose an Integrated, Stack-Agnostic Monitoring Platform

Addressing modern infrastructure complexity requires monitoring platforms specifically designed for heterogeneous environments. These integrated solutions provide unified visibility across traditional networks, cloud platforms, container orchestration systems, and IoT deployments through a single management interface.

For cloud monitoring, effective tools integrate with native cloud provider APIs to track resource utilization, service health, and cost optimization opportunities. Container monitoring solutions understand pod lifecycles, track resource allocation across cluster nodes, and monitor service mesh communications. IoT monitoring platforms aggregate telemetry from distributed sensors while managing the unique challenges of constrained devices and intermittent connectivity.

Application performance monitoring (APM) capabilities provide code-level visibility, tracing requests across distributed microservices to identify performance bottlenecks. Combined with infrastructure monitoring, APM reveals the complete picture from physical network through to application code, enabling comprehensive root cause analysis.

By selecting platforms that natively support multiple technology stacks, organizations avoid the operational burden of managing disconnected monitoring tools, ensuring consistent visibility regardless of infrastructure diversity.

8. Skills Gap and Tool Overload for IT Teams

Even the most sophisticated monitoring technology fails without skilled teams to interpret data and respond effectively. Organizations face a persistent skills gap as monitoring complexity outpaces training and expertise development. Network administrators must now understand cloud architectures, DevOps engineers need network troubleshooting skills, and security teams require visibility into application behavior.

Tool proliferation compounds this challenge. IT departments often accumulate specialized monitoring solutions for different technology domains—separate tools for networks, servers, applications, databases, cloud services, and security. Each tool requires distinct expertise, creates information silos, and increases operational complexity. Teams waste time switching between interfaces, correlating data manually, and maintaining multiple platforms.

Solution: Prioritize Intuitive Interfaces and Cross-Team Collaboration Features

Addressing the human element of monitoring problems requires tools designed for usability and collaboration. Modern monitoring platforms feature intuitive dashboards that present complex data through clear visualizations, reducing the learning curve for new team members. Role-based views customize the interface for different expertise levels, showing network engineers detailed topology information while giving executives high-level service status summaries.

Effective collaboration features facilitate communication between traditionally siloed teams. Shared dashboards provide common visibility for DevOps, NetOps, and SecOps teams. Integrated ticketing systems automatically create incident records with relevant monitoring data, eliminating manual information transfer. Annotation capabilities allow teams to mark events on performance charts, building institutional knowledge about infrastructure behavior.

Consolidating monitoring into unified platforms reduces tool sprawl and simplifies operations. Rather than maintaining expertise across five specialized solutions, teams develop deep knowledge of a comprehensive platform that addresses multiple monitoring domains. This consolidation improves efficiency while reducing licensing and training costs.

How to Choose the Right Tool to Solve These Monitoring Problems

Selecting an appropriate monitoring solution requires careful evaluation against the challenges outlined above. The right tool should address your organization’s specific monitoring problems while scaling to accommodate future growth. Consider the following essential criteria when evaluating monitoring platforms:

Essential Features Checklist

- Automatic Device Discovery: The platform must continuously discover and inventory network devices, servers, and cloud resources without manual intervention, eliminating blind spots through multi-protocol scanning capabilities.

- Custom Dashboards and Visualization: Comprehensive visualization capabilities including network topology maps, customizable dashboards for different roles, and real-time performance graphs that reveal infrastructure relationships and dependencies.

- Smart Alerting with Adaptive Thresholds: Intelligent notification systems that learn baseline behavior, reduce false positives through statistical analysis, and route alerts based on severity and technical responsibility.

- Scalability and Distributed Architecture: Architecture supporting distributed polling engines for multi-site monitoring, centralized management consoles, and the capacity to monitor thousands of devices without performance degradation.

- Multi-Technology Support: Unified visibility across traditional networks, server infrastructure, cloud platforms (AWS, Azure, Google Cloud), container environments, and application performance, eliminating the need for multiple specialized tools.

- Capacity Planning and Forecasting: Historical trend analysis with predictive forecasting that projects resource exhaustion dates, enabling proactive infrastructure upgrades before performance impacts occur.

- Trial or Demo Options: Availability of free trials or demonstrations allowing evaluation in your specific environment, ensuring the solution addresses your unique monitoring challenges before committing to purchase.

Prioritize solutions that excel across these dimensions while offering intuitive interfaces that reduce training requirements and facilitate cross-team collaboration. The ideal monitoring platform should solve today’s problems while adapting to tomorrow’s infrastructure evolution.

Frequently Asked Questions (FAQ)

What is the biggest challenge in network monitoring?

Most IT professionals identify achieving complete visibility while avoiding alert fatigue as the paramount monitoring challenge. Organizations struggle to maintain comprehensive infrastructure awareness across distributed and hybrid environments without overwhelming teams with excessive notifications. This dual challenge requires balancing thorough monitoring coverage with intelligent alert filtering that preserves team responsiveness.

How can I reduce false alerts in my monitoring system?

Reducing false alerts requires implementing adaptive thresholds that understand normal performance patterns through machine learning analysis. Configure custom notification profiles that route alerts based on severity and technical expertise, ensuring critical issues reach appropriate responders immediately while informational alerts generate periodic summaries. Alert correlation capabilities that group related events into single notifications further reduce noise while maintaining awareness of systemic problems.

What are the cost implications of poor monitoring?

Poor monitoring carries substantial financial consequences beyond the commonly cited $42,000 per hour average IT downtime cost reported by Gartner research. Organizations experience additional losses from missed service level agreements resulting in contractual penalties, emergency infrastructure purchases at premium pricing, overtime expenses during incident resolution, customer churn following service disruptions, and long-term reputational damage that impacts future revenue. Effective monitoring represents insurance against these cascading costs.

Can a single tool monitor both network and cloud infrastructure?

Yes, modern integrated monitoring platforms provide unified visibility across on-premises networks, traditional servers, and public cloud services through single management interfaces. These solutions integrate with cloud provider APIs to monitor virtual machines, containerized applications, serverless functions, and cloud-native services alongside traditional infrastructure. This unified approach eliminates the operational burden of managing separate network and cloud monitoring tools while providing comprehensive visibility for hybrid architectures.

How important is capacity planning in monitoring?

Capacity planning represents a critical monitoring function that prevents unexpected performance degradation and costly emergency upgrades. Without predictive forecasting, organizations risk sudden service quality deterioration when resource limits are reached during growth periods, resulting in emergency procurement at premium prices and rushed implementation increasing error probability. Effective capacity planning through continuous trend analysis enables proactive infrastructure investments that maintain performance while optimizing budget allocation and avoiding business disruption.

Conclusion: Transforming Monitoring Challenges Into Operational Excellence

The monitoring problems examined throughout this guide—from network blind spots to alert fatigue, from distributed architecture complexity to skills gaps—represent substantial obstacles to IT operational excellence. However, each challenge presents opportunities for improvement through strategic technology selection and thoughtful implementation.

Success requires moving beyond reactive troubleshooting toward proactive infrastructure management. Automated device discovery eliminates blind spots, intelligent alerting preserves team responsiveness, and predictive forecasting prevents capacity crises. Unified monitoring platforms that span networks, servers, applications, and cloud infrastructure provide the comprehensive visibility necessary for effective operations in modern hybrid environments.
